Case Study - Secure Australian Generative AI

Driven by the success of OpenAI's ChatGPT, Australian businesses are increasingly looking for a secure, privacy-first way to test-drive generative AI.

- Client

- Proof of Concept

- Year

- Service

- Secure Generative AI

Overview

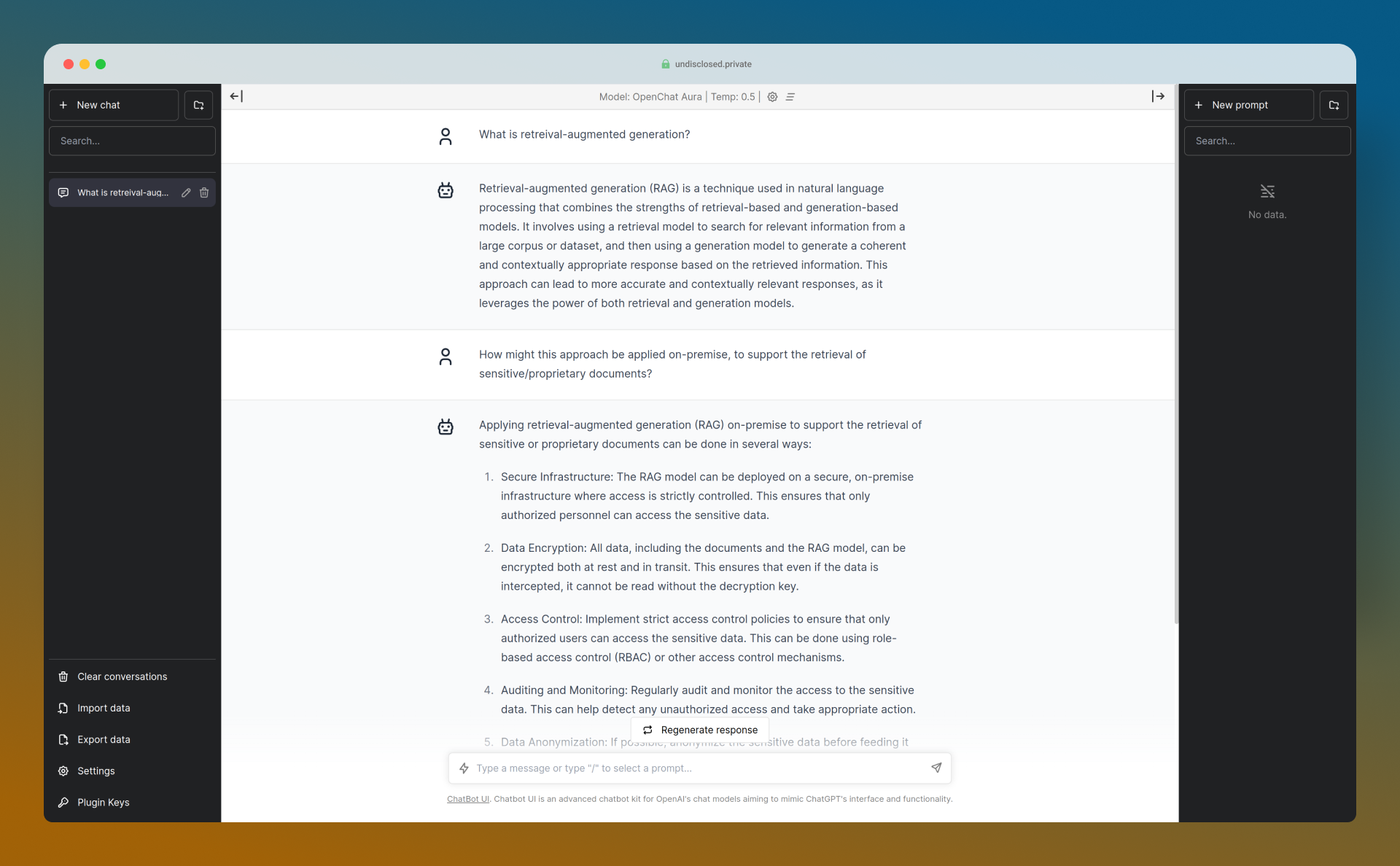

Stacktrace, Brisbane's leading generative AI consultancy, recently developed a Retrieval-Augmented Generation (RAG) proof of concept for a prospective client who wishes to remain anonymous for now. Their needs will be familiar to many organisations, and we'll refer to them hereafter as Undisclosed.

Like many Australians, executives at Undisclosed see enormous potential in the generative AI ecosystem led by ChatGPT. They also remain firmly committed to securing their data and complying with the Australian Privacy Principles. For Undisclosed, that includes keeping personally identifiable data within Australian data centers.

Recent internal assessments had identified an opportunity to improve the consistency and availability of staff training materials, and a need to free up capacity across the team without increasing headcount.

Stacktrace then ran a lightweight discovery process (delivered remotely, without physical site access), and two key insights emerged.

- For enquiries from external customers, recent hires resolved around 80% of issues well, but the long tail caused a bottleneck whenever those issues had to be escalated to more experienced staff.

- High-quality, authoritative sources describing the appropriate business processes (e.g., supporting long-form consumer paperwork) were already in place, but were difficult to navigate without extensive domain familiarity.

While we're vocal advocates for applying Large Language Models to NLP/NLU tasks such as classification and recommendation, in this situation an internal-facing chat interface seemed an excellent fit.

With the target identified, work began on transforming the corpus of training material (a mix of HTML, PDF and Word documents spread across public-facing and intranet sources) into vector embeddings to support granular semantic search.
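A minimal sketch of that indexing and search step follows. It assumes a simple overlapping word-window chunker and uses a hypothetical `toy_embed` bag-of-words function as a stand-in for a real embedding model; a production system would call a hosted embedding model and store the vectors in a vector database. All names and parameters here are illustrative, not Stacktrace's implementation.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split a document into overlapping word windows (sizes are illustrative)."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    if not words:
        return []
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the document
    return chunks


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a sparse bag-of-words vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(docs: dict[str, str]) -> list[tuple[str, str, Counter]]:
    """Chunk every source document and store (source, chunk, vector) triples."""
    index = []
    for source, text in docs.items():
        for chunk in chunk_text(text):
            index.append((source, chunk, toy_embed(chunk)))
    return index


def search(index: list[tuple[str, str, Counter]], query: str, k: int = 3) -> list[tuple[float, str, str]]:
    """Return the k chunks most similar to the query as (score, source, chunk)."""
    q = toy_embed(query)
    scored = [(cosine(q, vec), source, chunk) for source, chunk, vec in index]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    docs = {
        "refunds.html": "To process a refund, verify the original receipt and complete form R2 before approval.",
        "onboarding.pdf": "New staff should complete induction training in their first week.",
    }
    for score, source, chunk in search(build_index(docs), "how do I process a refund", k=1):
        print(f"{score:.2f} {source}: {chunk}")
```

Swapping `toy_embed` for dense embeddings changes the vector type but not the shape of the pipeline: chunk, embed, store, then rank chunks by cosine similarity to the embedded query.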

Building on Stacktrace's Next.js starter kit and calling Azure's Sydney-based OpenAI service, we delivered a lean proof of concept in a matter of weeks, with a clear pathway for extension and improvement as new AI models become available.
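Once relevant chunks are retrieved, RAG grounds the model by placing them in the prompt. The hypothetical sketch below assembles chat messages in the `role`/`content` shape used by chat-completion APIs, numbering each excerpt so answers can cite their sources and applying a rough character budget. The function name `build_rag_messages`, the `max_chars` budget and the system-prompt wording are assumptions for illustration. Sending the messages to an Azure OpenAI GPT-4 deployment (for example with the `openai` package's `AzureOpenAI` client) is deliberately left out.

```python
def build_rag_messages(
    question: str,
    retrieved: list[tuple[str, str]],
    max_chars: int = 6000,
) -> list[dict[str, str]]:
    """Assemble chat messages that ground the answer in retrieved (source, chunk) pairs."""
    context_parts = []
    used = 0
    for i, (source, chunk) in enumerate(retrieved, start=1):
        part = f"[{i}] ({source}) {chunk}"
        if used + len(part) > max_chars:
            break  # stop adding excerpts once the rough size budget would be exceeded
        context_parts.append(part)
        used += len(part)

    # Hypothetical system prompt: answer only from the excerpts and cite them.
    system = (
        "You are an internal assistant for staff. Answer only from the numbered "
        "excerpts below and cite them like [1]. If the excerpts do not cover the "
        "question, say so and suggest escalating to a senior colleague.\n\n"
        + "\n".join(context_parts)
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    messages = build_rag_messages(
        "How do I process a refund?",
        [("refunds.html", "Verify the receipt and complete form R2.")],
    )
    for message in messages:
        print(message["role"], "->", message["content"])
```

Telling the model to say when the excerpts fall short, and to suggest escalation, is one way such an assistant could route long-tail enquiries to experienced staff rather than guess.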

What we did

- Azure OpenAI (GPT-4)

- Retrieval-Augmented Generation (RAG)

- Vector Embeddings

- Semantic Search

- AWS Infrastructure

- Operations and Maintenance

- Generative AI

- Australian Cloud Infrastructure: AWS & Azure OpenAI

- Time to MVP: < 4 weeks

- Large Language Models: Fine-tuned